You have two Azure virtual networks named VNet1 and VNet2. VNet1 contains an Azure virtual machine named VM1. VNet2 contains an Azure virtual machine named VM2. VM1 hosts a frontend application that connects to VM2 to retrieve data. Users report that the frontend application is slower than usual. You need to view the average round-trip time (RTT) of the packets from VM1 to VM2. Which Azure Network Watcher feature should you use?

Connection Troubleshoot

IP Flow Verify

Network Security Groups flow logs

Connection Monitor

Connection Monitor provides unified, continuous network connectivity monitoring for Azure and hybrid cloud environments. It detects anomalies, identifies the specific network component responsible for an issue, and offers actionable insights for troubleshooting. Connection Monitor tests use TCP, ICMP, and HTTP probes to measure aggregated packet loss and network latency, including round-trip time (RTT). That is why it can report the average RTT of packets from VM1 to VM2. A unified topology view shows the end-to-end network path and highlights each hop with its performance metrics. Detailed logs help you analyze and troubleshoot the root cause of an issue.
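To make the metric concrete: average RTT is the mean round-trip time of the probes that received a reply, and packet loss is the share of probes that got none. The sketch below uses hypothetical probe samples, where `None` marks a lost probe. It illustrates the arithmetic only and is not how Connection Monitor computes its aggregates internally.

```python
from statistics import mean
from typing import Optional, Sequence


def summarize_probes(rtts_ms: Sequence[Optional[float]]) -> tuple[float, float]:
    """Return (average RTT in ms, packet loss percentage) for a series of probes.

    Each entry is the measured round-trip time of one probe, or None if the
    probe timed out (counted as lost).
    """
    if not rtts_ms:
        raise ValueError("no probes recorded")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    # If every probe was lost there is no RTT to average.
    avg_rtt = mean(replies) if replies else float("nan")
    return avg_rtt, loss_pct


# Hypothetical probes from VM1 to VM2: five replies and one timeout.
samples = [2.0, 3.0, 2.5, None, 4.0, 3.5]
avg, loss = summarize_probes(samples)
print(f"average RTT {avg:.2f} ms, packet loss {loss:.1f}%")
```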

You have an Azure subscription named Subscription1. You plan to deploy an Ubuntu Server virtual machine named VM1 to Subscription1. You need to perform a custom deployment of the virtual machine. A specific trusted root certification authority (CA) must be added during the deployment. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

File to create:

Answer.ini

Autounattend.conf

Cloud-init.txt

Unattend.xml

Why use Cloud-init.txt? Cloud-init is the widely used initialization tool for Linux virtual machines in Azure. It applies custom configuration during VM deployment, including adding a trusted root CA certificate. Because Ubuntu Server is a Linux-based OS, it does not use Windows-specific automation files such as Unattend.xml.

Why not the other options? Answer.ini is not used for VM deployment in Azure. Files like it typically configure individual software installers, not OS-level setup. Autounattend.conf is not a standard provisioning file. The Windows Setup answer file with a similar name is Autounattend.xml, which is Windows-specific anyway. Unattend.xml is a Windows-specific answer file for automating Windows setup.

How cloud-init works for this case: you pass the cloud-init file (Cloud-init.txt) to the Ubuntu VM as custom data at deployment time, and cloud-init applies it during first boot. The file can install packages, set up users, and add trusted CA certificates.
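A cloud-init file for this scenario is YAML that starts with `#cloud-config` and uses cloud-init's `ca_certs` module. The module's `trusted` list takes PEM-encoded certificates to add to the system trust store; older cloud-init releases spelled the key `ca-certs`. The Python sketch below only assembles that text. The certificate body is a placeholder, not a real CA.

```python
import textwrap


def build_cloud_config(ca_pem: str) -> str:
    """Assemble a #cloud-config document that adds one trusted root CA.

    ca_pem is a PEM-encoded certificate (BEGIN/END CERTIFICATE lines included).
    """
    # The YAML block scalar ("- |") needs every certificate line indented under it.
    indented_pem = textwrap.indent(ca_pem.strip(), " " * 6)
    return (
        "#cloud-config\n"
        "ca_certs:\n"
        "  trusted:\n"
        "    - |\n"
        f"{indented_pem}\n"
    )


# Placeholder certificate body, for illustration only.
placeholder_pem = """-----BEGIN CERTIFICATE-----
MIIB...placeholder...
-----END CERTIFICATE-----"""

# Save this output as Cloud-init.txt and pass it as custom data at deployment.
print(build_cloud_config(placeholder_pem))
```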

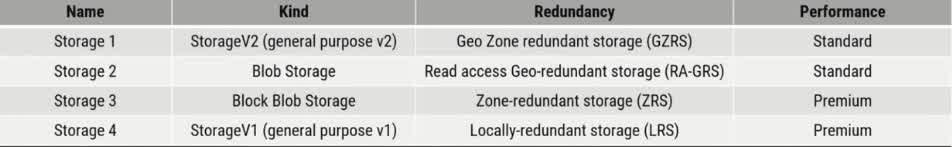

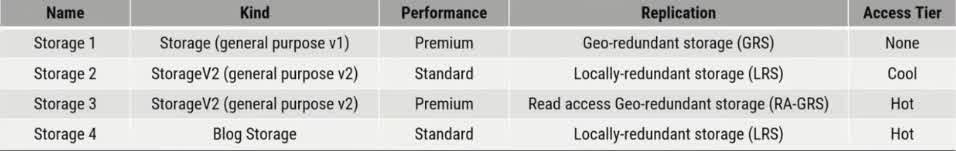

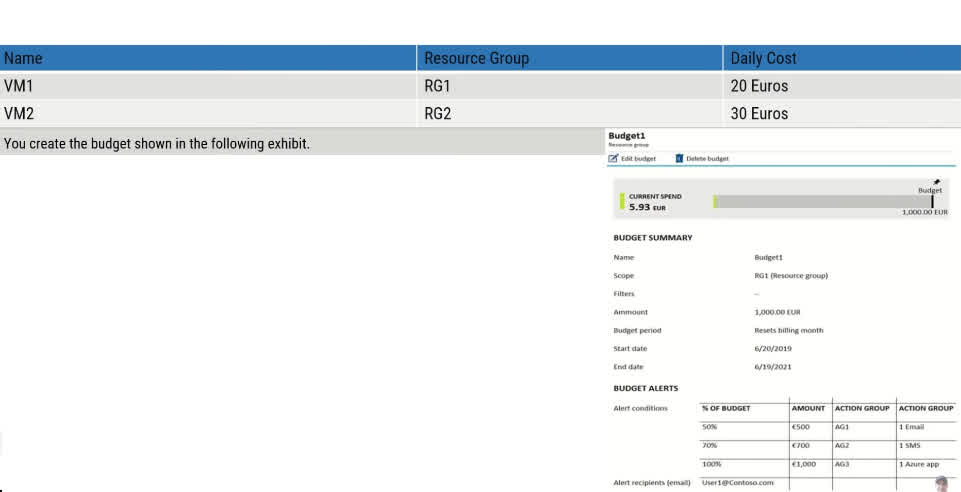

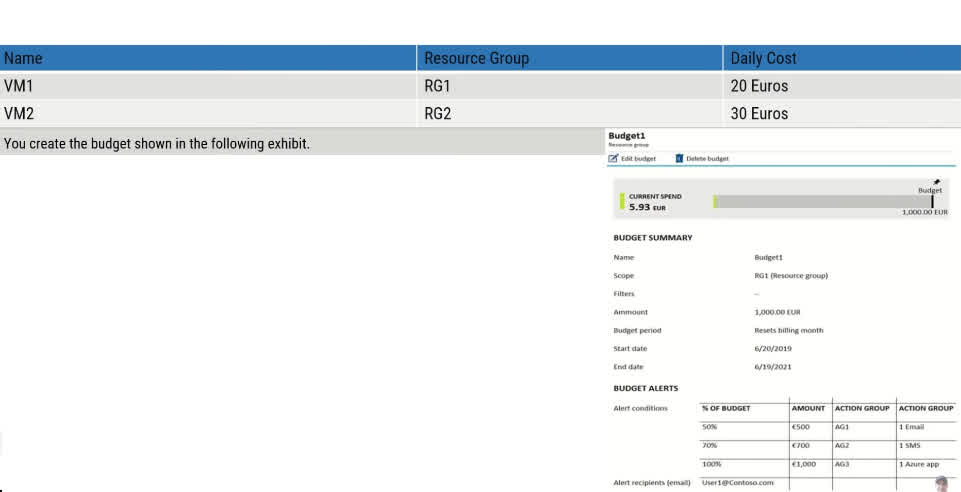

You have an Azure subscription. The subscription contains a storage account named storage1 with the lifecycle management rules shown in the following table. On June 1, you store a blob named File1 in the Hot access tier of storage1. What was the state of File1 on June 7?

stored in the Cool access tier

stored in the Archive access tier

stored in the Hot access tier

deleted

Azure Storage lifecycle management rules automate data tiering and deletion based on specified conditions.

Timeline of events: On June 1, you upload File1 to the Hot access tier. On June 6, the blob has existed for 5 days. On June 7, the lifecycle rules are evaluated, and the blob is now more than 5 days old.

How the rules are applied: Rule 1 (move to Cool storage), Rule 2 (delete the blob), and Rule 3 (move to Archive storage) all apply after 5 days, so all three match File1 in the same run. When more than one action matches the same blob, lifecycle management applies the least expensive action: delete is cheaper than tierToArchive, which is cheaper than tierToCool. Deletion therefore takes precedence. Once a blob is deleted, it cannot be moved to Cool or Archive storage.
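The tie-breaking rule can be modeled in a few lines. The sketch assumes the three rules described above, each using a `daysAfterModificationGreaterThan` value of 5. The year and the rule dictionary are illustrative. The real service evaluates the policy on its own schedule, roughly once a day.

```python
from datetime import date
from typing import Dict, Optional

# Cheapest action first: when several actions match the same blob in one run,
# lifecycle management applies the least expensive one.
ACTION_COST_ORDER = ["delete", "tierToArchive", "tierToCool"]


def lifecycle_action(last_modified: date, today: date,
                     rules: Dict[str, int]) -> Optional[str]:
    """Return the action applied on `today`, or None if no rule is eligible.

    `rules` maps an action name to its daysAfterModificationGreaterThan value.
    """
    age_days = (today - last_modified).days
    eligible = {action for action, days in rules.items() if age_days > days}
    for action in ACTION_COST_ORDER:
        if action in eligible:
            return action
    return None


# Hypothetical rules mirroring the explanation: all three trigger after 5 days.
rules = {"tierToCool": 5, "delete": 5, "tierToArchive": 5}
print(lifecycle_action(date(2024, 6, 1), date(2024, 6, 7), rules))  # delete
```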

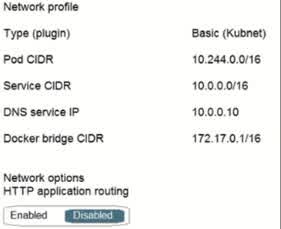

You have an Azure subscription that contains an Azure Active Directory (Azure AD) tenant named contoso.com and an Azure Kubernetes Service (AKS) cluster named AKS1. An administrator reports that she is unable to grant access to AKS1 to the users in contoso.com. You need to ensure that access to AKS1 can be granted to the contoso.com users. What should you do first?

From contoso.com, modify the Organization relationships settings.

From contoso.com, create an OAuth 2.0 authorization endpoint.

Recreate AKS1.

From AKS1, create a namespace.

OAuth 2.0 is the industry-standard protocol for authorization. It allows a resource owner to grant a client limited access to protected resources. Designed to work with HTTP, OAuth separates the role of the client from that of the resource owner. The client requests access to resources that are controlled by the resource owner and hosted by the resource server. The authorization server issues access tokens to the client with the approval of the resource owner. The client then presents those tokens to the resource server to access the protected resources.
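For reference, the Microsoft identity platform exposes a tenant-specific authorization endpoint of the form `https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize`. The sketch below builds an authorization-code request against it. The client ID, redirect URI, scope, and state values are hypothetical placeholders.

```python
from urllib.parse import urlencode


def build_authorize_url(tenant: str, client_id: str, redirect_uri: str,
                        scope: str, state: str) -> str:
    """Build an OAuth 2.0 authorization-code request URL (Microsoft identity platform v2.0)."""
    base = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize"
    query = urlencode({
        "client_id": client_id,
        "response_type": "code",      # authorization-code grant
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,               # echoed back so the client can detect forged responses
    })
    return f"{base}?{query}"


url = build_authorize_url(
    tenant="contoso.com",
    client_id="00000000-0000-0000-0000-000000000000",  # hypothetical app ID
    redirect_uri="http://localhost:8400",               # hypothetical
    scope="openid profile",
    state="xyz123",
)
print(url)
```

The user signs in at this URL, and the authorization server redirects back with a code that the client exchanges for an access token.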

You need to determine who deleted a network security group through Resource Manager. You are viewing the Activity Log when another Azure Administrator says you should use this event category to narrow your search. Choose the most suitable category.

Administrative

Service Health

Alert

Recommendation

Policy

Azure Activity Log provides a record of operations performed on Azure resources, including creations, modifications, and deletions. To find out who deleted a network security group (NSG), look for management operations performed through Azure Resource Manager.

Event categories in the Activity Log:

Administrative (correct): Tracks create, update, and delete operations on resources, including who performed the action, when it happened, and the operation type. Deleting an NSG is an administrative action, so it is recorded in this category.

Service Health: Reports issues with Azure services, such as outages or maintenance. Does not track resource deletions.

Alert: Logs when an Azure Monitor alert is triggered by predefined conditions. Does not capture deletion events.

Recommendation: Records Azure Advisor recommendations for optimizing cost, security, and performance. Not related to deletion tracking.

Policy: Logs compliance and enforcement actions related to Azure Policy. Does not track direct resource deletions.
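Narrowing the search amounts to filtering on category and operation. The sketch below filters hypothetical Activity Log records. Their shape is loosely modeled on the exported event schema (`category.value`, `operationName.value`, `caller`), and the callers are invented. `Microsoft.Network/networkSecurityGroups/delete` is the Resource Manager operation name for deleting an NSG.

```python
from collections.abc import Iterable

NSG_DELETE_OP = "Microsoft.Network/networkSecurityGroups/delete"


def who_deleted_nsg(events: Iterable[dict]) -> list[str]:
    """Return callers of NSG delete operations in the Administrative category."""
    return [
        e["caller"]
        for e in events
        if e["category"]["value"] == "Administrative"
        and e["operationName"]["value"] == NSG_DELETE_OP
    ]


# Hypothetical sample records; real exports contain many more fields.
events = [
    {"category": {"value": "Administrative"},
     "operationName": {"value": "Microsoft.Network/networkSecurityGroups/write"},
     "caller": "admin1@contoso.com"},
    {"category": {"value": "Administrative"},
     "operationName": {"value": NSG_DELETE_OP},
     "caller": "admin2@contoso.com"},
]
print(who_deleted_nsg(events))  # ['admin2@contoso.com']
```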

You have a registered DNS domain named contoso.com. You create a public Azure DNS zone named contoso.com. You need to ensure that records created in the contoso.com zone are resolvable from the internet. What should you do?

Create NS records in contoso.com.

Modify the SOA record in the DNS domain registrar.

Create the SOA record in contoso.com.

Modify the NS records in the DNS domain registrar.

To make records in the Azure DNS zone contoso.com resolvable from the internet, you must delegate the domain to the Azure DNS name servers. When you create a public Azure DNS zone, Azure assigns it an NS record set that lists four name servers, which answer DNS queries for the zone. For the public internet to use them, you must update the name server settings at your domain registrar (the service where you registered contoso.com).

Steps to ensure public resolution:

1. Retrieve the name servers from Azure DNS. In the Azure portal, open the contoso.com DNS zone and note the Azure name servers listed in its NS record set.
2. Update the name servers at the domain registrar. Sign in to your registrar (e.g., GoDaddy or Namecheap) and locate the name server settings for contoso.com. Replace the existing name servers with the Azure-provided ones, then save the changes.
3. Wait for DNS propagation. Changes to NS records can take a few hours to propagate globally. After that, DNS queries for contoso.com are answered by Azure DNS.

Why not the other options?

a) Create NS records in contoso.com: The Azure DNS zone already contains NS records by default. The problem is not a missing record inside Azure DNS but the missing delegation at the registrar.

b) Modify the SOA record in the DNS domain registrar: The SOA (Start of Authority) record holds administrative information about the zone, such as its primary name server, but it does not control delegation. Changing it at the registrar does not redirect queries to Azure DNS.

c) Create the SOA record in contoso.com: Azure DNS creates the SOA record for the zone automatically, and a zone holds only one SOA record. Creating one is unnecessary and does not enable resolution from the internet.
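Delegation is correct when the name servers listed at the registrar match the NS record set that Azure assigned to the zone. The sketch compares the two sets. The server names follow Azure DNS's usual `nsN-XX.azure-dns.*` pattern but are hypothetical values. In practice, you would copy them from the zone's NS record set.

```python
from collections.abc import Iterable


def normalize(names: Iterable[str]) -> set[str]:
    """Lower-case names and drop any trailing root dot so comparisons are exact."""
    return {n.lower().rstrip(".") for n in names}


def delegation_matches(azure_ns: Iterable[str], registrar_ns: Iterable[str]) -> bool:
    """True if the registrar delegates to exactly the Azure-assigned name servers."""
    return normalize(azure_ns) == normalize(registrar_ns)


# Hypothetical values for the contoso.com zone.
azure_ns = ["ns1-01.azure-dns.com.", "ns2-01.azure-dns.net.",
            "ns3-01.azure-dns.org.", "ns4-01.azure-dns.info."]
old_registrar_ns = ["ns1.registrar-example.com", "ns2.registrar-example.com"]
new_registrar_ns = ["NS1-01.AZURE-DNS.COM", "ns2-01.azure-dns.net",
                    "ns3-01.azure-dns.org", "ns4-01.azure-dns.info"]

print(delegation_matches(azure_ns, old_registrar_ns))  # False
print(delegation_matches(azure_ns, new_registrar_ns))  # True
```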

You have an Azure subscription that contains a web app named webapp1. You need to add a custom domain named www.contoso.com to webapp1. What should you do first?

Create a DNS record

Add a connection string

Upload a certificate

Stop webapp1

When you add a custom domain (www.contoso.com) to an Azure web app (webapp1), Azure must verify that you own the domain before binding it to the app. Verification works through DNS records, so creating them comes first.

Steps to add a custom domain to an Azure web app:

1. Create the DNS records (the required first step). At your DNS provider (e.g., GoDaddy or Namecheap), add a CNAME record that maps www.contoso.com to webapp1.azurewebsites.net, so that requests for www.contoso.com reach the web app. App Service also asks for a TXT record named asuid.www that contains the app's custom domain verification ID.
2. Add the custom domain in Azure. In the Azure portal, open the web app, select Custom domains > Add custom domain, and enter www.contoso.com. Azure checks the DNS records to confirm ownership.
3. (Optional) Secure the custom domain with TLS/SSL. If HTTPS is required, add a certificate at this point. App Service Managed Certificates are available if you don't want to purchase one separately.

Why not the other options?

b) Add a connection string: Connection strings configure database connections, not domains.

c) Upload a certificate: A certificate is needed for HTTPS, but only after the domain has been added and verified.

d) Stop webapp1: There is no need to stop the web app. A custom domain can be added while the app is running.
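The records for the `www` subdomain can be written out as zone-file lines. In this sketch, the CNAME target comes from the steps above, and the `asuid.www` TXT record reflects App Service's domain-verification check. The verification ID and the 3600-second TTL are hypothetical placeholders. The real ID is shown on the app's Custom domains page.

```python
def custom_domain_records(subdomain: str, app_name: str, verification_id: str) -> list[str]:
    """Return zone-file lines mapping a subdomain to an App Service app."""
    return [
        # Routes requests for the subdomain to the app's default host name.
        f"{subdomain} 3600 IN CNAME {app_name}.azurewebsites.net.",
        # Proves domain ownership to App Service.
        f'asuid.{subdomain} 3600 IN TXT "{verification_id}"',
    ]


for line in custom_domain_records("www", "webapp1", "HYPOTHETICAL-VERIFICATION-ID"):
    print(line)
```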

You sign up for Azure Active Directory (Azure AD) Premium. You need to add a user named admin1@contoso.com as an administrator on all the computers that will be joined to the Azure AD domain. What should you configure in Azure AD?

Device settings from the Devices blade

Providers from the MFA Server blade

User settings from the Users blade

General settings from the Groups blade

When a computer is Azure AD joined, local administrator rights are not automatically assigned to all users. However, Azure AD lets you configure who becomes a local administrator on all Azure AD-joined devices. This setting is in the Device settings section of the Devices blade.

Steps to make admin1@contoso.com an administrator on all Azure AD-joined devices:

1. In the Azure portal, go to Azure Active Directory.
2. Select Devices > Device settings.
3. Find the setting "Additional local administrators on Azure AD joined devices", add admin1@contoso.com, and save the changes.

Why not the other options?

b) Providers from the MFA Server blade: The MFA Server blade is for multi-factor authentication settings and has nothing to do with device administration.

c) User settings from the Users blade: The Users blade manages individual users but does not control device-level permissions such as local administrator rights.

d) General settings from the Groups blade: The Groups blade manages group memberships and roles, not device administration settings.

You have a deployment template named Template1 that is used to deploy 10 Azure web apps. You need to identify what to deploy before you deploy Template1. The solution must minimize Azure costs. What should you identify?

five Azure Application Gateways

one App Service plan

10 App Service plans

one Azure Traffic Manager

one Azure Application Gateway

In Azure, an App Service plan defines the compute resources (CPU, memory, and storage) that host one or more web apps. To deploy 10 web apps at minimal cost, deploy them all into a single App Service plan rather than creating 10 separate plans.

How App Service plans work: An App Service plan determines pricing and scaling for its web apps. Multiple web apps can share a single plan, so they share its resources instead of being billed separately. With all 10 web apps in the same plan, you pay for one set of resources instead of 10.

Why not the other options?

a) Five Azure Application Gateways: Application Gateway is a layer 7 load balancer for managing traffic. It is not required for deploying web apps, so you don't need five of them before deployment.

c) 10 App Service plans: This creates 10 separate compute environments and increases cost unnecessarily, because a single plan can host all of the web apps.

d) One Azure Traffic Manager: Traffic Manager is a DNS-based load balancer for global traffic distribution. It is useful for multi-region deployments but is not required before deploying web apps.

e) One Azure Application Gateway: Application Gateway manages incoming traffic with features such as WAF (Web Application Firewall) and SSL termination, but it is not a prerequisite for deploying web apps.
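The cost argument is simple multiplication, because a dedicated App Service plan is billed per plan instance, not per app. The hourly rate below is a made-up figure, not a real Azure price. The sketch assumes a dedicated tier (Basic or above), one instance per plan, and a 730-hour month.

```python
HOURS_PER_MONTH = 730          # common approximation for monthly estimates
HYPOTHETICAL_RATE = 0.10       # USD per plan-instance hour, illustrative only


def monthly_cost(plans: int, instances_per_plan: int = 1,
                 rate: float = HYPOTHETICAL_RATE) -> float:
    """Estimated monthly compute cost; apps inside a dedicated plan add no compute charge."""
    return plans * instances_per_plan * rate * HOURS_PER_MONTH


shared = monthly_cost(plans=1)      # 10 apps sharing one plan
separate = monthly_cost(plans=10)   # one plan per app
print(f"one plan: ${shared:.2f}, ten plans: ${separate:.2f}")
```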

Your company’s Azure subscription includes two Azure networks named VirtualNetworkA and VirtualNetworkB. VirtualNetworkA includes a VPN gateway that is configured to make use of static routing. Also, a site-to-site VPN connection exists between your company’s on-premises network and VirtualNetworkA. You have configured a point-to-site VPN connection to VirtualNetworkA from a workstation running Windows 10. After configuring virtual network peering between VirtualNetworkA and VirtualNetworkB, you confirm that you can access VirtualNetworkB from the company’s on-premises network. However, you find that you cannot establish a connection to VirtualNetworkB from the Windows 10 workstation. You have to make sure that a connection to VirtualNetworkB can be established from the Windows 10 workstation. Solution: You choose the Allow gateway transit setting on VirtualNetworkA. Does the solution meet the goal?

Yes

No

Virtual network peering lets VirtualNetworkA and VirtualNetworkB communicate, but a point-to-site (P2S) VPN client does not automatically learn about the peered network (VirtualNetworkB).

Why "Allow gateway transit" does not solve the problem: Allow gateway transit is a peering setting on the VNet that owns the VPN gateway (VirtualNetworkA). Together with "Use remote gateways" on the peered VNet (VirtualNetworkB), it lets VirtualNetworkB use VirtualNetworkA's gateway. The on-premises network can already reach VirtualNetworkB, which shows this path is already working. Selecting the setting does nothing for the Windows 10 workstation.

Why the Windows 10 workstation cannot reach VirtualNetworkB: A Windows P2S client routes only the address spaces that were included in its VPN client configuration package at download time. The workstation's package was downloaded before the peering existed, so it contains routes for VirtualNetworkA only.

What does meet the goal: After a topology change such as adding peering, download and reinstall the VPN client configuration package on the Windows 10 workstation. The new package includes VirtualNetworkB's address space.

Your company’s Azure subscription includes two Azure networks named VirtualNetworkA and VirtualNetworkB. VirtualNetworkA includes a VPN gateway that is configured to make use of static routing. Also, a site-to-site VPN connection exists between your company’s on-premises network and VirtualNetworkA. You have configured a point-to-site VPN connection to VirtualNetworkA from a workstation running Windows 10. After configuring virtual network peering between VirtualNetworkA and VirtualNetworkB, you confirm that you can access VirtualNetworkB from the company’s on-premises network. However, you find that you cannot establish a connection to VirtualNetworkB from the Windows 10 workstation. You have to make sure that a connection to VirtualNetworkB can be established from the Windows 10 workstation. Solution: You choose the Allow gateway transit setting on VirtualNetworkB. Does the solution meet the goal?

Yes

No

P2S VPN clients connected to VirtualNetworkA do not automatically learn routes to VirtualNetworkB through virtual network peering. Enabling "Allow gateway transit" on VirtualNetworkB does not change that.

Why "Allow gateway transit" on VirtualNetworkB does not work: Allow gateway transit is configured on the peering of the VNet that owns the VPN gateway. VirtualNetworkB has no gateway to share, because the gateway is in VirtualNetworkA. The matching setting on VirtualNetworkB's side is "Use remote gateways". Because the on-premises network can already reach VirtualNetworkB, gateway transit is evidently working already.

Why the Windows 10 workstation cannot reach VirtualNetworkB: The P2S client routes only the address spaces included in its VPN client configuration package. That package was downloaded before peering was configured, so it does not include VirtualNetworkB.

What does meet the goal: Download and reinstall the VPN client configuration package on the Windows 10 workstation so that its routes include VirtualNetworkB.

Your company’s Azure subscription includes two Azure networks named VirtualNetworkA and VirtualNetworkB. VirtualNetworkA includes a VPN gateway that is configured to make use of static routing. Also, a site-to-site VPN connection exists between your company’s on-premises network and VirtualNetworkA. You have configured a point-to-site VPN connection to VirtualNetworkA from a workstation running Windows 10. After configuring virtual network peering between VirtualNetworkA and VirtualNetworkB, you confirm that you can access VirtualNetworkB from the company’s on-premises network. However, you find that you cannot establish a connection to VirtualNetworkB from the Windows 10 workstation. You have to make sure that a connection to VirtualNetworkB can be established from the Windows 10 workstation. Solution: You downloaded and reinstalled the VPN client configuration package on the Windows 10 workstation. Does the solution meet the goal?

Yes

No

A point-to-site (P2S) VPN client routes traffic according to the configuration package it was installed from. The workstation's package was generated before the peering existed. It therefore does not contain VirtualNetworkB's address space, and the client does not know to send that traffic through the tunnel. Downloading and reinstalling the package after the peering is configured gives the client the updated routes, so the workstation can reach VirtualNetworkB.

Why does this work? The VPN client configuration package captures the routes the client should send through the tunnel, as they were at download time. Adding peering to VirtualNetworkB (with gateway transit, as the working on-premises access shows) changes the routes the gateway advertises. An already-installed Windows client does not pick up that change until the package is downloaded and installed again.

Why not other solutions? Enabling peering or changing gateway transit settings does not update routes on an already-installed P2S client. Manually adding routes on the workstation could work, but reinstalling the VPN client package is the simplest and most reliable way to apply the correct routes.
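The stale-package effect can be illustrated with Python's `ipaddress` module. The client only sends traffic into the tunnel for prefixes it was given at download time. The address spaces below (10.1.0.0/16 for VirtualNetworkA, 10.2.0.0/16 for VirtualNetworkB) and the VM address are hypothetical.

```python
import ipaddress
from collections.abc import Iterable


def routed_through_vpn(dest: str, client_routes: Iterable[str]) -> bool:
    """True if dest falls inside any prefix the VPN client knows about."""
    addr = ipaddress.ip_address(dest)
    return any(addr in ipaddress.ip_network(prefix) for prefix in client_routes)


# Routes captured when the package was downloaded, before peering existed.
old_package_routes = ["10.1.0.0/16"]                  # VirtualNetworkA only
# Routes in a package downloaded after peering was configured.
new_package_routes = ["10.1.0.0/16", "10.2.0.0/16"]   # VirtualNetworkA + VirtualNetworkB

vm_in_vnet_b = "10.2.0.4"
print(routed_through_vpn(vm_in_vnet_b, old_package_routes))  # False
print(routed_through_vpn(vm_in_vnet_b, new_package_routes))  # True
```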

You have an Azure subscription named Subscription1. You plan to deploy an Ubuntu Server virtual machine named VM1 to Subscription1. You need to perform a custom deployment of the virtual machine. A specific trusted root certification authority (CA) must be added during the deployment. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Tool to deploy Virtual Machine:

New-AzureRmVm cmdlet

New-AzVM cmdlet

Create-AzVM cmdlet

az vm create command

Why use az vm create? az vm create is an Azure CLI command for deploying Linux and Windows virtual machines. It supports custom configuration at deployment time. Its --custom-data parameter accepts a cloud-init file, which can add a trusted root CA certificate to an Ubuntu VM. The Azure CLI is cross-platform, which makes it well suited to automating Linux VM deployments.

Why not the other options? New-AzureRmVm belongs to the AzureRM PowerShell module, which was deprecated and has since been retired. It is not recommended for new deployments. New-AzVM is a valid PowerShell cmdlet for creating VMs, but PowerShell is more commonly used for Windows-based automation. For Linux VMs such as Ubuntu, the Azure CLI (az vm create) is preferred here because it integrates more directly with cloud-init. Create-AzVM does not exist. The PowerShell cmdlet for VM deployment is New-AzVM.
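A typical invocation passes the cloud-init file through `--custom-data`. The sketch below only assembles the argument list and does not call Azure. The resource group `rg1`, the admin user name, and the `Ubuntu2204` image alias are assumptions to adapt to your environment and CLI version.

```python
import shlex


def az_vm_create_args(resource_group: str, vm_name: str, cloud_init_path: str) -> list[str]:
    """Assemble an `az vm create` command that applies a cloud-init file at first boot."""
    return [
        "az", "vm", "create",
        "--resource-group", resource_group,
        "--name", vm_name,
        "--image", "Ubuntu2204",            # image alias; assumed available in your CLI version
        "--admin-username", "azureuser",    # hypothetical admin account
        "--generate-ssh-keys",
        "--custom-data", cloud_init_path,   # cloud-init config, e.g. the trusted CA
    ]


args = az_vm_create_args("rg1", "VM1", "Cloud-init.txt")
print(shlex.join(args))
# To deploy for real (requires the Azure CLI and a signed-in session):
# subprocess.run(args, check=True)
```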

Your company wants to have some post-deployment configuration and automation tasks on Azure Virtual Machines. Solution: As an administrator, you suggested using ARM templates. Does the solution meet the goal?

Yes

No

Azure Resource Manager (ARM) templates are primarily used for infrastructure as code (IaC) to deploy and configure Azure resources. However, ARM templates are not well suited to post-deployment configuration and automation tasks inside virtual machines (VMs).

Why ARM templates are not the right solution: ARM templates are declarative. They define which resources should be created, but they are not designed for post-deployment automation inside a VM. ARM templates let you configure VM properties (e.g., networking, OS type, extensions). They lack the automation capabilities needed for tasks such as installing software, configuring applications, or running scripts inside the VM after deployment.

Correct solutions for post-deployment configuration and automation:

Azure virtual machine extensions: Use the Custom Script Extension to run scripts inside the VM after deployment. You can also install and configure software with PowerShell DSC (Desired State Configuration), Chef, or Puppet.

Azure Automation and runbooks: Automate tasks with Azure Automation runbooks, which can execute scripts inside Azure VMs.

Azure Automanage: For Windows and Linux VMs, Automanage simplifies post-deployment configuration by applying best practices automatically.

Azure DevOps Pipelines or GitHub Actions: Use pipelines to trigger post-deployment scripts or Ansible playbooks.

Your company wants to have some post-deployment configuration and automation tasks on Azure Virtual Machines. Solution: As an administrator, you suggested using Virtual machine extensions. Does the solution meet the goal?

Yes

No

Azure virtual machine extensions are the correct choice for post-deployment configuration and automation tasks on Azure virtual machines (VMs). Extensions let administrators run scripts, install software, configure settings, and automate management tasks after the VM has been deployed.

Why virtual machine extensions are the right solution:

Designed for post-deployment tasks: Extensions perform custom configuration, automation, and updates after a VM has been deployed.

Support for various automation tools: The Custom Script Extension runs PowerShell or Bash scripts. The Desired State Configuration (DSC) extension keeps VMs in a predefined configuration state. Third-party integrations work with tools such as Chef, Puppet, or Ansible for configuration management.

No manual intervention: Extensions can be applied automatically once a VM is deployed, reducing the need for manual configuration.

Examples of what VM extensions can do: install software (e.g., IIS, SQL Server, Apache, or custom applications), configure firewall rules or security settings, apply patches or updates after deployment, and deploy monitoring agents (Azure Monitor, Log Analytics, Microsoft Defender for Cloud).

Why other solutions, such as ARM templates, are not enough: ARM templates define VM properties but do not automate tasks inside the VM after deployment. Azure Automation is useful for broader automation but does not run inside the VM the way extensions do.
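For a Linux VM, the Custom Script Extension is identified by publisher `Microsoft.Azure.Extensions` and name `CustomScript`, and it takes a `commandToExecute` setting. The sketch assembles an `az vm extension set` call without running it. The resource group, VM name, and command are hypothetical.

```python
import json
import shlex


def custom_script_args(resource_group: str, vm_name: str, command: str) -> list[str]:
    """Assemble an `az vm extension set` call for the Linux Custom Script Extension."""
    settings = json.dumps({"commandToExecute": command})  # runs inside the VM after deployment
    return [
        "az", "vm", "extension", "set",
        "--resource-group", resource_group,
        "--vm-name", vm_name,
        "--publisher", "Microsoft.Azure.Extensions",
        "--name", "CustomScript",
        "--settings", settings,
    ]


args = custom_script_args("rg1", "VM1", "apt-get update && apt-get install -y nginx")
print(shlex.join(args))
```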

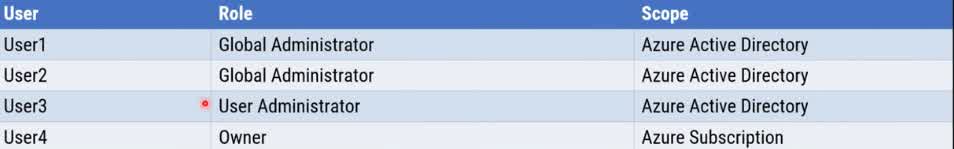

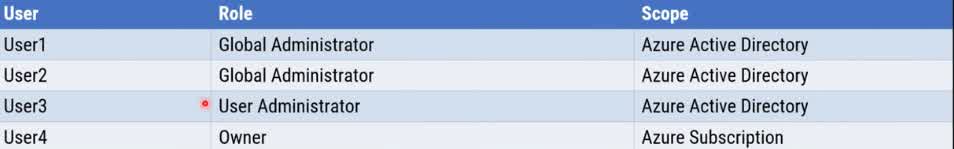

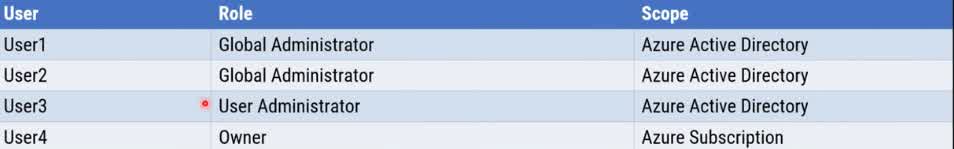

You have an Azure subscription that contains the following users in an Azure Active Directory tenant named contoso.onmicrosoft.com. User1 creates a new Azure Active Directory tenant named external.contoso.onmicrosoft.com. You need to create new user accounts in external.contoso.onmicrosoft.com. Solution: You instruct User4 to create the user accounts. Does that meet the goal?

Yes

No

User4 has the Owner role at the Azure subscription level, not a role in Azure Active Directory (Azure AD). Managing users in Azure AD requires a directory role such as Global Administrator or User Administrator.

Why User4 cannot create user accounts: Azure subscription roles (e.g., Owner, Contributor) apply to resources within the subscription, such as VMs, storage, and networking. Azure AD roles (e.g., Global Administrator, User Administrator) apply to identity and user management. Because User4 is only an Owner at the subscription level, User4 has no privileges to manage Azure AD users in external.contoso.onmicrosoft.com.

Who can create users in external.contoso.onmicrosoft.com? Only User1, who created the tenant and therefore became its Global Administrator. User2 (Global Administrator) and User3 (User Administrator) hold their roles in contoso.onmicrosoft.com only. They cannot create users in the new tenant unless they are assigned a role there.

You have an Azure subscription that contains the following users in an Azure Active Directory tenant named contoso.onmicrosoft.com. User1 creates a new Azure Active Directory tenant named external.contoso.onmicrosoft.com. You need to create new user accounts in external.contoso.onmicrosoft.com. Solution: You instruct User3 to create the user accounts. Does that meet the goal?

Yes

No

User3 has the User Administrator role in the contoso.onmicrosoft.com Azure AD tenant. However, this role does not grant any permissions in the new tenant (external.contoso.onmicrosoft.com) that User1 created.

Why User3 cannot create user accounts: Azure AD roles are tenant-specific. User3's User Administrator role applies only to contoso.onmicrosoft.com, not to external.contoso.onmicrosoft.com. Because the new tenant is a separate directory, User3 has no roles there by default.

Only users with appropriate roles in the new tenant can create users. When User1 created external.contoso.onmicrosoft.com, User1 became a Global Administrator of that tenant. Other users from contoso.onmicrosoft.com do not automatically get any roles in it.

Who can create users in external.contoso.onmicrosoft.com? User1 (Global Administrator in external.contoso.onmicrosoft.com) can. User2 could do so only after being assigned Global Administrator in the new tenant.

You have an Azure subscription that contains the following users in an Azure Active Directory tenant named contoso.onmicrosoft.com. User1 creates a new Azure Active Directory tenant named external.contoso.onmicrosoft.com. You need to create new user accounts in external.contoso.onmicrosoft.com. Solution: You instruct User2 to create the user accounts. Does that meet the goal?

Yes

No

User2 has the Global Administrator role in the contoso.onmicrosoft.com Azure AD tenant. However, this role does not automatically apply to the new tenant (external.contoso.onmicrosoft.com) that User1 created.

Why User2 cannot create user accounts: Azure AD roles are tenant-specific. Being a Global Administrator in contoso.onmicrosoft.com grants no permissions in external.contoso.onmicrosoft.com. Because external.contoso.onmicrosoft.com is a separate Azure AD tenant, User2 has no administrative privileges there by default.

Who gets admin rights in the new tenant? The user who creates a new tenant (User1) automatically becomes a Global Administrator in it. Other users from the original tenant (contoso.onmicrosoft.com) get no roles in the new tenant unless explicitly assigned.

Who can create users in external.contoso.onmicrosoft.com? User1 (Global Administrator in external.contoso.onmicrosoft.com).

Correct solution: For User2 to create user accounts, User1 must first add User2 as a Global Administrator in external.contoso.onmicrosoft.com.

You have an Azure subscription that contains the following users in an Azure Active Directory tenant named contoso.onmicrosoft.com. User1 creates a new Azure Active Directory tenant named external.contoso.onmicrosoft.com. You need to create new user accounts in external.contoso.onmicrosoft.com. Solution: You instruct User1 to create the user accounts. Does that meet the goal?

Yes

No

When User1 creates the new Azure Active Directory (Azure AD) tenant external.contoso.onmicrosoft.com, User1 automatically becomes a Global Administrator of that tenant.

Why User1 can create user accounts: In Azure AD, the user who creates a new tenant is automatically assigned the Global Administrator role in it. Because User1 created external.contoso.onmicrosoft.com, User1 has full administrative control, including user management. The Global Administrator role can create, modify, and delete users, assign roles to users, and manage groups and directory settings.

Who else can create users in external.contoso.onmicrosoft.com? Any other user who is assigned the Global Administrator or User Administrator role in external.contoso.onmicrosoft.com.

Why users from contoso.onmicrosoft.com cannot create users there: User2 (Global Administrator in contoso.onmicrosoft.com) and User3 (User Administrator in contoso.onmicrosoft.com) have no permissions in the new tenant unless assigned. User4 (Owner of the Azure subscription) holds a subscription role, and subscription roles do not grant permissions in Azure AD.
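The tenant-scoping rule can be modeled as role assignments keyed by tenant. The assignments below mirror the roles described in these explanations: User1 and User2 as Global Administrators and User3 as User Administrator in contoso.onmicrosoft.com, with User4's subscription Owner role deliberately absent. The data structure is a hypothetical illustration, not an Azure AD API.

```python
# Directory roles that can create users (a subset, for illustration).
USER_CREATOR_ROLES = {"Global Administrator", "User Administrator"}

# Directory role assignments, keyed by tenant. Subscription RBAC roles such as
# Owner do not appear here: they are not Azure AD directory roles.
directory_roles = {
    "contoso.onmicrosoft.com": {
        "User1": "Global Administrator",
        "User2": "Global Administrator",
        "User3": "User Administrator",
    },
    # The creator of a new tenant becomes its Global Administrator.
    "external.contoso.onmicrosoft.com": {
        "User1": "Global Administrator",
    },
}


def can_create_users(user: str, tenant: str) -> bool:
    """True if the user holds a user-creating directory role in that tenant."""
    return directory_roles.get(tenant, {}).get(user) in USER_CREATOR_ROLES


for user in ["User1", "User2", "User3", "User4"]:
    print(user, can_create_users(user, "external.contoso.onmicrosoft.com"))
```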

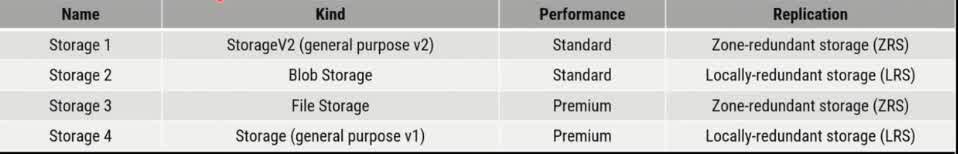

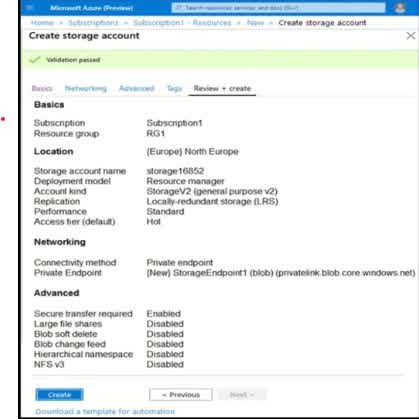

You create an Azure Storage account. You plan to add 10 blob containers to the storage account. You need to use a different key for one of the containers to encrypt data at rest. What should you do before you create the container?

Generate a shared access signature (SAS)

Modify the minimum TLS version

Rotate the access keys

Create an encryption scope

Azure Storage automatically encrypts data at rest using Microsoft-managed keys by default. However, if you need to encrypt data in a specific blob container using a different key (such as a customer-managed key stored in Azure Key Vault), you must first create an encryption scope. An encryption scope allows you to define a unique encryption configuration within a storage account. Each blob container in the storage account can be assigned a different encryption scope, enabling you to use different keys for different containers. Steps: Create an encryption scope in the Azure Storage account. You can choose to use a Microsoft-managed key or a customer-managed key (CMK). Specify the encryption scope when creating the blob container. Any blobs added to that container will be encrypted using the specified encryption scope and key. Why not the other options? (a) Generate a shared access signature (SAS) A SAS token provides secure, limited-time access to resources but does not control encryption at rest. It is used for authentication and authorization, not encryption. (b) Modify the minimum TLS version Changing the TLS version affects transport security, not data encryption at rest. TLS is used to secure data in transit. (c) Rotate the access keys Rotating access keys helps improve security by refreshing authentication credentials but does not allow you to use a different encryption key for a specific container.
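
To make the container-level behavior concrete, the following Python sketch models it in memory. It is a conceptual illustration under stated assumptions, not the Azure SDK: the Container class, the scope_for_blob function, and the scope names "account-key" and "scope-cmk" are all hypothetical. It mirrors the two settings a container carries (a default encryption scope, and whether individual blobs may override it), which the real azure-storage-blob library exposes through its ContainerEncryptionScope type.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical in-memory model, NOT the Azure SDK. It shows how a container's
# default encryption scope decides which key encrypts the blobs written to it.
ACCOUNT_KEY_SCOPE = "account-key"  # placeholder for the account-wide default


@dataclass
class Container:
    name: str
    default_scope: str = ACCOUNT_KEY_SCOPE
    prevent_override: bool = False  # if True, blobs must use default_scope


def scope_for_blob(container: Container, requested: Optional[str] = None) -> str:
    """Return the encryption scope that a new blob in this container would use."""
    if requested is None or requested == container.default_scope:
        return container.default_scope
    if container.prevent_override:
        raise PermissionError(
            f"{container.name} only accepts scope {container.default_scope}"
        )
    return requested


# Nine containers use the account key; the tenth uses a separate, hypothetical
# scope ("scope-cmk") that must exist before that container is created.
containers = [Container(f"container{i}") for i in range(1, 10)]
containers.append(
    Container("container10", default_scope="scope-cmk", prevent_override=True)
)

for c in containers:
    print(c.name, "->", scope_for_blob(c))
```

In this model only the tenth container resolves to the separate scope, which is the situation the question describes.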

You have an Azure Active Directory (Azure AD) tenant named contosocloud.onmicrosoft.com. Your company has a public DNS zone for contoso.com. You add contoso.com as a custom domain name to Azure AD. You need to ensure that Azure can verify the domain name. Which type of DNS record should you create?

MX

NSEC

PTR

RRSIG

When you add a custom domain (for example, contoso.com) to Azure Active Directory (Azure AD), you must verify that you own the domain. Azure AD provides a verification value that you add as a DNS record in the domain's public DNS zone. Azure AD accepts either a TXT record or an MX record for verification. TXT records are more common, but TXT is not among the answer choices, so MX is the correct answer. Why use an MX record? An MX (Mail Exchange) record normally routes email, but Azure AD also accepts it for domain verification. Azure AD provides an MX record value (e.g., xxxxxxxxx.msv1.invalid) that you add at your DNS provider. Once the record has propagated, Azure AD can verify the domain. No email functionality is affected, because the provided MX record does not point to a functional mail server; it exists only for verification. Why not the other options? (b) NSEC (Next Secure record): used in DNSSEC (Domain Name System Security Extensions) to protect against DNS spoofing, but not related to domain verification. (c) PTR (Pointer record): used for reverse DNS lookups (mapping an IP address to a domain name), but not for verifying domain ownership. (d) RRSIG (Resource Record Signature): a DNSSEC record that ensures the integrity and authenticity of DNS data, but it does not help with domain verification.
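
The check Azure AD performs is internal to the service, but the following Python sketch models what it looks for, assuming the usual record formats: an MX record whose host is <code>.msv1.invalid, or a TXT record of the form MS=<code>. The function name is_verified and the code ms12345678 are hypothetical; the real verification value is shown in the Azure portal.

```python
from typing import Iterable, Tuple

# Simplified model of the domain-ownership check. A record is
# (name, type, value); the MX preference number is omitted for brevity.
Record = Tuple[str, str, str]


def is_verified(records: Iterable[Record], domain: str, code: str) -> bool:
    """Return True if the zone contains the verification MX or TXT record."""
    expected_mx = f"{code}.msv1.invalid".lower()
    expected_txt = f"MS={code}"
    for name, rtype, value in records:
        if name.rstrip(".").lower() != domain.lower():
            continue  # record belongs to a different name
        if rtype == "MX" and value.rstrip(".").lower() == expected_mx:
            return True
        if rtype == "TXT" and value == expected_txt:
            return True
    return False  # PTR, NSEC, RRSIG, etc. never satisfy the check


# "ms12345678" is a hypothetical verification code.
zone = [
    ("contoso.com", "MX", "ms12345678.msv1.invalid"),
    ("contoso.com", "A", "203.0.113.10"),
]
print(is_verified(zone, "contoso.com", "ms12345678"))
```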

You have an Azure subscription that contains an Azure Active Directory (Azure AD) tenant named contoso.com and an Azure Kubernetes Service (AKS) cluster named AKS1. An administrator reports that she is unable to grant access to AKS1 to the users in contoso.com. You need to ensure that access to AKS1 can be granted to the contoso.com users. What should you do first?

From contoso.com, modify the Organization relationships settings

From contoso.com, create an OAuth 2.0 authorization endpoint

Recreate AKS1

From AKS1, create a namespace

Azure Kubernetes Service (AKS) can integrate with Azure Active Directory (Azure AD) to enable user authentication and access control. If an administrator is unable to grant access to AKS1 for users in contoso.com, it is likely because AKS1 is not properly configured to authenticate users via Azure AD. To fix this, the first step is to ensure that an OAuth 2.0 authorization endpoint is created in Azure AD. This endpoint allows Azure AD to authenticate users and authorize access to AKS. Why is an OAuth 2.0 authorization endpoint needed? AKS uses Azure AD-based authentication to manage user access. OAuth 2.0 is the standard protocol used for authentication and authorization in Azure AD. The OAuth 2.0 authorization endpoint is required for AKS to verify user identities and enforce role-based access control (RBAC). Without this endpoint, Azure AD cannot issue tokens to users for authentication to AKS. Why Not the Other Options? “From contoso.com, modify the Organization relationships settings” This setting is used for B2B (Business-to-Business) collaboration and external identity management, not for AKS authentication. Since contoso.com is the same tenant, modifying this setting will not help in granting AKS access. “Recreate AKS1” While AKS must be configured with Azure AD integration during creation, recreating the cluster is not necessary to resolve this issue. The missing authentication component can be added without recreating AKS1. “From AKS1, create a namespace” A namespace in Kubernetes is used for organizing workloads and does not affect authentication. It does not control who can access the cluster—RBAC and Azure AD do.
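
For reference, the Microsoft identity platform exposes the OAuth 2.0 authorization endpoint per tenant at https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize. The Python sketch below only builds that URL for the contoso.com tenant; it does not configure AKS. The client ID and redirect URI are hypothetical placeholders, and the parameters shown are a minimal set for the authorization code flow.

```python
from urllib.parse import urlencode

AUTHORITY = "https://login.microsoftonline.com"


def authorize_url(tenant: str, client_id: str, redirect_uri: str, scope: str) -> str:
    """Build the v2.0 OAuth 2.0 authorization endpoint URL for a tenant."""
    query = urlencode({
        "client_id": client_id,        # hypothetical app registration ID
        "response_type": "code",       # authorization code flow
        "redirect_uri": redirect_uri,  # hypothetical redirect URI
        "scope": scope,
    })
    return f"{AUTHORITY}/{tenant}/oauth2/v2.0/authorize?{query}"


print(authorize_url("contoso.com", "00000000-0000-0000-0000-000000000000",
                    "http://localhost", "openid"))
```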

You create an Azure Storage account named storage1. You plan to create a file share named data. Users need to map a drive to the data file share from home computers that run Windows 10. Which outbound port should you open between the home computers and the data file share?

80

443

445

3389

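Port 445 is correct: Windows 10 maps a drive to an Azure file share by using the SMB protocol, which runs over TCP port 445, so this is the outbound port to open. Port 80: HTTP, used for web traffic. Port 443: HTTPS, also used for web traffic (and for the Azure Files REST API), but not for mapping an SMB drive. Port 3389: Remote Desktop Protocol (RDP).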
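
To confirm from a home computer that outbound TCP 445 is not blocked (many ISPs block it), a small Python check can attempt a connection to the file service endpoint. This is a sketch: it assumes the storage account is in the global Azure cloud, where Azure Files endpoints follow the pattern <account>.file.core.windows.net, and it only tests reachability; it does not authenticate or map the drive. The helper names are hypothetical.

```python
import socket

SMB_PORT = 445  # SMB, used when mapping a drive to an Azure file share


def file_share_host(account: str) -> str:
    """Azure Files endpoint for a storage account in the global Azure cloud."""
    return f"{account}.file.core.windows.net"


def smb_port_open(account: str, timeout: float = 3.0) -> bool:
    """Return True if an outbound TCP connection to port 445 succeeds."""
    try:
        with socket.create_connection((file_share_host(account), SMB_PORT), timeout=timeout):
            return True
    except OSError:  # DNS failure, refusal, or timeout
        return False


if __name__ == "__main__":
    print("445 reachable:", smb_port_open("storage1"))
```

On Windows, Test-NetConnection -ComputerName storage1.file.core.windows.net -Port 445 performs the same check in PowerShell.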

Your company has an Azure Active Directory (Azure AD) tenant named weyland.com that is configured for hybrid coexistence with the on-premises Active Directory domain. You have a server named DirSync1 that is configured as a DirSync server. You create a new user account in the on-premises Active Directory. You now need to replicate the user information to Azure AD immediately. Solution: You use Active Directory Sites and Services to force replication of the Global Catalog on a domain controller. Does the solution meet the goal?

Yes

No

The problem requires forcing an immediate synchronization of a newly created on-premises Active Directory (AD) user to Azure AD. However, the proposed solution—using Active Directory Sites and Services to force replication of the Global Catalog on a domain controller—only replicates data within on-premises domain controllers. It does not trigger synchronization to Azure AD. Why is the proposed solution incorrect? Active Directory Sites and Services is used to manage replication between domain controllers (DCs) in an on-premises AD environment. Forcing replication of the Global Catalog (GC) only ensures that changes are propagated among domain controllers within the on-premises infrastructure. However, Azure AD Connect (DirSync) is responsible for syncing changes from on-premises AD to Azure AD. Simply forcing replication between DCs does not push the changes to Azure AD.

Your company has an Azure Active Directory (Azure AD) tenant named weyland.com that is configured for hybrid coexistence with the on-premises Active Directory domain. You have a server named DirSync1 that is configured as a DirSync server. You create a new user account in the on-premises Active Directory. You now need to replicate the user information to Azure AD immediately. Solution: You run the Start-ADSyncSyncCycle -PolicyType Initial PowerShell cmdlet. Does the solution meet the goal?

Yes

No

The goal is to replicate the newly created user account from on-premises Active Directory (AD) to Azure AD immediately. The proposed solution runs the following PowerShell command: Start-ADSyncSyncCycle -PolicyType Initial. This does trigger a synchronization, but it is not the most efficient option: an Initial sync performs a full synchronization of all objects in scope, not just the recent changes, so it is slower and more resource-intensive than necessary. Because only the newly created user needs to be synchronized, a delta sync is more appropriate: Start-ADSyncSyncCycle -PolicyType Delta. A delta sync is faster and synchronizes only recent changes (such as newly added users), so the new user appears in Azure AD without reprocessing every other object. Reserve an Initial sync for major configuration changes or for when Azure AD Connect is being set up for the first time.
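
The difference between the two policy types can be illustrated with a toy model in Python. It is conceptual only: Azure AD Connect tracks changes with its own internal watermarks, and the ADObject class, the change_id counter, and the object counts below are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ADObject:
    name: str
    change_id: int  # hypothetical counter, increased on every change


def initial_sync(objects: List[ADObject]) -> List[str]:
    """Full (Initial) sync: every object in scope is processed again."""
    return [o.name for o in objects]


def delta_sync(objects: List[ADObject], watermark: int) -> List[str]:
    """Delta sync: only objects changed after the last watermark are processed."""
    return [o.name for o in objects if o.change_id > watermark]


# 1,000 existing users were synchronized up to change 1000; then one user is added.
directory = [ADObject(f"user{i}", change_id=i) for i in range(1, 1001)]
directory.append(ADObject("newuser", change_id=1001))
last_watermark = 1000

print(len(initial_sync(directory)), "objects processed by an Initial sync")
print(delta_sync(directory, last_watermark), "processed by a Delta sync")
```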

Your company has an Azure Active Directory (Azure AD) tenant named weyland.com that is configured for hybrid coexistence with the on-premises Active Directory domain. You have a server named DirSync1 that is configured as a DirSync server. You create a new user account in the on-premises Active Directory. You now need to replicate the user information to Azure AD immediately. Solution: You run the Start-ADSyncSyncCycle -PolicyType Delta PowerShell cmdlet. Does the solution meet the goal?

Yes

No

The goal is to immediately synchronize a newly created user account from on-premises Active Directory (AD) to Azure AD. The proposed solution runs the following PowerShell command: Start-ADSyncSyncCycle -PolicyType Delta This successfully meets the requirement because: “Delta” synchronization only syncs the changes (new users, modified attributes, deletions, etc.) instead of performing a full synchronization. It is fast and efficient, ensuring that the newly created user is replicated to Azure AD immediately. It avoids unnecessary processing compared to an “Initial” sync, which would resync all objects. Why This Works? Azure AD Connect (DirSync) is responsible for synchronizing on-premises AD objects to Azure AD. By default, synchronization happens every 30 minutes. The Start-ADSyncSyncCycle -PolicyType Delta command forces an immediate sync of only recent changes instead of waiting for the next scheduled sync.
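
If the sync needs to be triggered from a script, the cmdlet can be wrapped as in this Python sketch. It assumes it runs on the sync server (DirSync1 here) where the Azure AD Connect ADSync PowerShell module is installed; the helper names sync_command and run_sync are hypothetical.

```python
import subprocess
from typing import List

VALID_POLICY_TYPES = ("Delta", "Initial")


def sync_command(policy_type: str = "Delta") -> List[str]:
    """Build the PowerShell command line that starts an Azure AD Connect sync cycle."""
    if policy_type not in VALID_POLICY_TYPES:
        raise ValueError(f"policy_type must be one of {VALID_POLICY_TYPES}")
    script = f"Import-Module ADSync; Start-ADSyncSyncCycle -PolicyType {policy_type}"
    return ["powershell.exe", "-NoProfile", "-Command", script]


def run_sync(policy_type: str = "Delta") -> int:
    """Run the sync cycle; must execute on the sync server itself."""
    result = subprocess.run(sync_command(policy_type), capture_output=True, text=True)
    print(result.stdout)
    return result.returncode


if __name__ == "__main__":
    print(" ".join(sync_command("Delta")))
```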

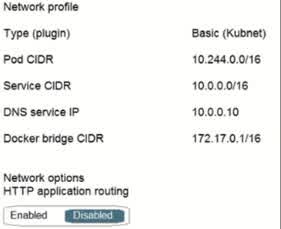

You have an Azure subscription. In the Azure portal, you plan to create a storage account named storage1 that will have the following settings: Performance: Standard; Replication: Zone-redundant storage (ZRS); Access tier (default): Cool; Hierarchical namespace: Disabled. You need to ensure that you can set Account kind for storage1 to BlockBlobStorage. Which setting should you modify first?

Performance

Replication

Access tier (default)

Hierarchical namespace

The account kind of an Azure Storage account determines the type of data it can store and how it operates. To set the account kind to BlockBlobStorage, you must first change the Performance setting to Premium. Why? BlockBlobStorage accounts are designed specifically for high-performance workloads that use block blobs, and they are available only with Premium performance. With Standard performance, the portal offers general-purpose v2 accounts, not BlockBlobStorage. Why not the other options? (b) Replication (ZRS): the replication type (LRS, ZRS, GRS, and so on) affects data redundancy, and ZRS is available for premium block blob accounts, so this setting does not prevent you from selecting BlockBlobStorage. (c) Access tier (Cool): access tiers (Hot, Cool, Archive) apply to standard accounts and control storage and access costs; premium BlockBlobStorage accounts do not use them, and changing this setting alone would not make BlockBlobStorage available. (d) Hierarchical namespace: hierarchical namespace enables Azure Data Lake Storage features and can be used with premium block blob accounts, so it is not what prevents the selection.
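
The rule can be expressed as a small Python check. This is a simplified sketch, not the portal's validation logic: it encodes the Premium requirement for BlockBlobStorage plus one additional fact (premium block blob accounts offer only LRS and ZRS redundancy), and the names StorageSettings and blockers_for_block_blob are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import List

# Redundancy options offered for premium block blob accounts.
PREMIUM_BLOCK_BLOB_REPLICATION = {"LRS", "ZRS"}


@dataclass(frozen=True)
class StorageSettings:
    performance: str              # "Standard" or "Premium"
    replication: str              # e.g. "LRS", "ZRS", "GRS"
    access_tier: str              # not checked: does not gate the account kind
    hierarchical_namespace: bool  # not checked: does not gate the account kind


def blockers_for_block_blob(s: StorageSettings) -> List[str]:
    """Settings that must change before BlockBlobStorage can be selected."""
    blockers = []
    if s.performance != "Premium":
        blockers.append("Performance")
    if s.replication not in PREMIUM_BLOCK_BLOB_REPLICATION:
        blockers.append("Replication")
    return blockers


planned = StorageSettings("Standard", "ZRS", "Cool", False)
print(blockers_for_block_blob(planned))
print(blockers_for_block_blob(replace(planned, performance="Premium")))
```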

You administer a solution in Azure that is currently having performance issues. You need to find the cause of the performance issues by reviewing metrics on the Azure infrastructure. Which tool should you use?

Azure Traffic Analytics

Azure Monitor

Azure Activity Log

Azure Advisor

When diagnosing performance issues in an Azure solution, you need a tool that provides real-time and historical performance metrics for Azure infrastructure (such as CPU, memory, disk I/O, and network usage). Azure Monitor is the best choice because it collects, analyzes, and visualizes performance metrics from Azure resources (VMs, databases, networking, applications, and so on); it provides real-time monitoring and alerting to detect performance bottlenecks; it integrates with Log Analytics and Application Insights to correlate system-level and application-level issues; and it includes Metrics Explorer for analyzing CPU, memory, and network performance trends over time. Why not the other options? (a) Azure Traffic Analytics: focuses on analyzing network traffic data collected through Azure Network Watcher. It helps identify network anomalies and malicious traffic, but it does not analyze infrastructure metrics such as CPU or memory usage. (c) Azure Activity Log: tracks administrative and security-related events (for example, resource creation, deletion, and role assignments). It does not provide performance metrics. (d) Azure Advisor: provides best-practice recommendations to improve security, performance, and cost efficiency. It does not offer detailed infrastructure monitoring or real-time performance insights.

You have an Azure subscription named Subscription1. Subscription1 contains a resource group named RG1. RG1 contains resources that were deployed by using templates. You need to view the date and time when the resources were created in RG1. Solution: From the Subscriptions blade, you select the subscription, and then click Programmatic deployment. Does the solution meet the goal?

Yes

No

The goal is to view the date and time when the resources in resource group RG1 were created. The proposed solution navigates to Programmatic deployment from the Subscriptions blade, which does not show resource creation timestamps. Why is this solution incorrect? The Programmatic deployment blade of a subscription lists Azure Marketplace offerings and lets you enable or disable programmatic deployment of them. It does not show deployment history or resource creation timestamps. The correct places to find this information are the Activity Log and the Deployments section of RG1. Correct approach to view resource creation date and time: 1. Using the Activity Log (best method): in the Azure portal, go to RG1 > Activity log and filter for deployment events to see when resources were created. The log contains the timestamp and details of each deployment, including which resources were deployed and by whom. 2. Using the Deployments section of RG1: in the Azure portal, go to RG1 > Deployments. This section shows the history of ARM template deployments, including timestamps. 3. Using Azure Resource Graph Explorer (for advanced queries): you can run queries to check when each resource was created. Summary: Programmatic deployment does not contain resource creation timestamps; the Activity Log or the Deployments section of RG1 does.
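
Besides the portal, Azure Resource Manager can return creation times directly: the Resources - List By Resource Group REST operation accepts $expand=createdTime,changedTime. The Python sketch below only builds that request URL; the subscription ID is a placeholder, the api-version is an assumption (use one supported in your environment), and sending the request requires an Azure AD bearer token, which is not shown.

```python
from urllib.parse import quote, urlencode

ARM_ENDPOINT = "https://management.azure.com"


def resources_with_created_time_url(subscription_id: str, resource_group: str,
                                    api_version: str = "2021-04-01") -> str:
    """URL for listing a resource group's resources with createdTime/changedTime."""
    path = (f"/subscriptions/{quote(subscription_id)}"
            f"/resourceGroups/{quote(resource_group)}/resources")
    query = urlencode({
        "$expand": "createdTime,changedTime",  # ask ARM to include timestamps
        "api-version": api_version,            # assumed version; adjust if needed
    })
    return f"{ARM_ENDPOINT}{path}?{query}"


# Placeholder subscription ID.
print(resources_with_created_time_url("00000000-0000-0000-0000-000000000000", "RG1"))
```

One way to send the request with authentication handled for you is az rest --method get --url "<url>".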

You have an Azure subscription named Subscription1. Subscription1 contains a resource group named RG1. RG1 contains resources that were deployed by using templates. You need to view the date and time when the resources were created in RG1. Solution: From the Subscriptions blade, you select the subscription, and then click Resource providers. Does the solution meet the goal?

Yes

No

The goal is to view the date and time when the resources in resource group RG1 were created. The proposed solution goes to the Subscriptions blade, selects the subscription, and clicks Resource providers. However, this does not provide resource creation timestamps. Why is this solution incorrect? Resource providers in Azure supply the different resource types (for example, Microsoft.Compute for VMs and Microsoft.Storage for storage accounts). This section only registers and manages resource providers; it does not show deployment history or timestamps, and it does not track when resources were created. Correct approach to view resource creation date and time: Method 1: use the Activity Log (best method). In the Azure portal, go to RG1 > Activity log and apply a filter for deployment events to see when each resource was created. Method 2: check the Deployments section of RG1. Go to RG1 > Deployments to see ARM template deployments, including when resources were provisioned. Method 3: use Azure Resource Graph (advanced queries). You can query Azure Resource Graph Explorer to find resource creation timestamps programmatically. Summary: Resource providers do not store or display resource creation timestamps; use the Activity Log or the Deployments section of RG1.

You have an Azure subscription named Subscription. Subscription contains a resource group named RG1. RG1 contains resources that were deployed by using templates. You need to view the date and time when the resources were created in RG1. Solution: From the RG1 blade, you click Automation script. Does the solution meet the goal?

Yes

No

The goal is to view the date and time when the resources in resource group RG1 were created. The proposed solution navigates to RG1 and clicks Automation script, but this does not provide resource creation timestamps. Why is this solution incorrect? The Automation script feature generates an ARM template for the existing resource group. The template describes the current configuration of the resources but does not show when they were created; it is used for redeploying resources, not for tracking their creation history. Correct approach to view resource creation date and time: Method 1: use the Activity Log (best method). In the Azure portal, go to RG1 > Activity log and apply a filter for deployment events to see when each resource was created. Method 2: check the Deployments section of RG1. Go to RG1 > Deployments to see ARM template deployments, including when resources were provisioned. Method 3: use Azure Resource Graph (advanced queries). You can query Azure Resource Graph Explorer to find resource creation timestamps programmatically. Summary: Automation script only generates a template for existing resources and does not track creation timestamps; use the Activity Log or the Deployments section of RG1.

You have an Azure subscription named Subscription. The Subscription contains a resource group named RG1. RG1 contains resources that were deployed by using templates. You need to view the date and time when the resources were created in RG1. Solution: From the RG1 blade, you click Deployments. Does the solution meet the goal?

Yes

No

The goal is to view the date and time when the resources in resource group RG1 were created. The proposed solution navigates to RG1 and clicks Deployments. This solution is correct because the Deployments section of RG1 provides a history of all ARM template deployments, including the date and time of each deployment, the resources created during each deployment, and the status of each deployment. Because RG1's resources were deployed by using templates, the Deployments blade accurately tracks when they were created. How to check deployment history in the Azure portal: go to RG1, click Deployments in the left menu to see a list of past deployments with their timestamps, and click a deployment to view its details, including which resources were created and when. Alternative ways to check resource creation timestamps: Method 1: use the Activity Log (another valid approach). The Activity Log captures deployment events, including timestamps of when resources were created; go to RG1 > Activity log and filter for deployment events. Method 2: use Azure Resource Graph (advanced queries) to retrieve resource creation timestamps programmatically. Why does this solution work? The Deployments blade stores the history of template-based deployments, including their timestamps, and because RG1's resources were deployed from templates, it is the most direct way to find their creation dates.
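
The same deployment history is available from the Azure CLI with az deployment group list --resource-group RG1, where each deployment's time is in properties.timestamp. The Python sketch below sorts a trimmed sample of that output chronologically; the sample deployments, their names, and their times are hypothetical, and the parser assumes ISO 8601 timestamps with at most microsecond precision.

```python
import json
from datetime import datetime
from typing import List, Tuple

# Trimmed sample shaped like "az deployment group list --resource-group RG1"
# output; deployment names and times are hypothetical.
SAMPLE = json.loads("""
[
  {"name": "vmDeploy",
   "properties": {"timestamp": "2024-03-02T10:15:00.000000+00:00",
                  "provisioningState": "Succeeded"}},
  {"name": "storageDeploy",
   "properties": {"timestamp": "2024-03-01T08:00:00.000000+00:00",
                  "provisioningState": "Succeeded"}}
]
""")


def deployment_history(deployments: list) -> List[Tuple[str, datetime, str]]:
    """Return (name, timestamp, state) tuples, oldest deployment first."""
    rows = []
    for d in deployments:
        props = d["properties"]
        rows.append((d["name"],
                     datetime.fromisoformat(props["timestamp"]),
                     props["provisioningState"]))
    return sorted(rows, key=lambda row: row[1])


for name, ts, state in deployment_history(SAMPLE):
    print(f"{ts.isoformat()}  {name}  {state}")
```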

The team for a delivery company is configuring a virtual machine scale set. Friday night is typically the busiest time. Conversely, 8 AM on Tuesday is generally the quietest time. Which of the following virtual machine scale set features should be configured to add more machines during that time?

Autoscale

Metric-based rules

Schedule-based rules

A virtual machine scale set (VMSS) can automatically scale the number of virtual machines (VMs) based on demand or on a predefined schedule. Because the company's demand varies predictably, with Friday night the busiest time and Tuesday morning the quietest, the best approach is to configure schedule-based rules. Schedule-based rules let you predefine scaling actions by time and day (for example, increase the number of instances on Friday nights and decrease it on Tuesday mornings); ensure that additional VMs are available before peak demand occurs, preventing performance issues; and optimize costs by reducing the number of instances when demand is low. Why not the other options? (a) Autoscale: "autoscale" is the general term for dynamically increasing or decreasing VM instances based on demand; on its own, it does not specify whether scaling is based on time or on system metrics. (b) Metric-based rules: these rules reactively adjust the number of VMs based on real-time metrics (for example, CPU usage or memory utilization). They do not add capacity ahead of predictable demand spikes, which makes them less effective for scheduled workloads.
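
In Azure, schedule-based rules are configured as autoscale profiles with a recurrence. The Python sketch below models only the idea (which instance count applies at a given time) and is not the Azure autoscale engine; the instance counts and time windows are hypothetical values chosen for illustration.

```python
from datetime import datetime

DEFAULT_INSTANCES = 3  # hypothetical baseline

# (weekday, start_hour, end_hour, instance_count); Monday == 0, end is exclusive.
SCHEDULE = [
    (4, 18, 24, 10),  # Friday evening and night: busiest period
    (1, 6, 10, 2),    # Tuesday around 8 AM: quietest period
]


def scheduled_capacity(now: datetime) -> int:
    """Return the instance count a schedule-based profile would apply at `now`."""
    for weekday, start, end, count in SCHEDULE:
        if now.weekday() == weekday and start <= now.hour < end:
            return count
    return DEFAULT_INSTANCES


print(scheduled_capacity(datetime(2024, 3, 1, 20)))  # a Friday evening
print(scheduled_capacity(datetime(2024, 3, 5, 8)))   # a Tuesday at 8 AM
```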

Your company has an Azure Active Directory (Azure AD) subscription. You need to deploy five virtual machines (VMs) to your company’s virtual network subnet. The VMs will each have both a public and private IP address. Inbound and outbound security rules for all of these virtual machines must be identical. Which of the following is the least amount of network interfaces needed for this configuration?

5

10

20

40

The least number of network interfaces needed for this configuration is one per VM. Each Azure virtual machine (VM) requires at least one network interface (NIC) to connect to the virtual network (VNet). The requirements state that each VM must have both a public and a private IP address, and that all VMs use identical inbound and outbound security rules. In Azure, a single NIC can have both a public and a private IP address assigned to it, so one NIC per VM is enough: 5 VMs × 1 NIC per VM = 5 NICs. Why not the other options? (b) 10 (2 NICs per VM): this would be necessary only if each VM required multiple NICs for separate traffic flows. Because one NIC can carry both a public and a private IP address, two NICs per VM are not required. (c) 20 (4 NICs per VM) and (d) 40 (8 NICs per VM): Azure allows multiple NICs per VM for advanced networking needs (for example, network appliances or multi-subnet routing), but they are unnecessary in this scenario.

Your company has an Azure Active Directory (Azure AD) subscription. You need to deploy five virtual machines (VMs) to your company’s virtual network subnet. The VMs will each have both a public and private IP address. Inbound and Outbound security rules for all of these virtual machines must be identical. Which of the following is the least amount of security groups needed for this configuration?

4

3

2

1

A network security group (NSG) is used in Azure to control inbound and outbound traffic to resources in a virtual network (VNet) by defining security rules. In this scenario, you need to deploy five virtual machines (VMs) in a virtual network subnet, assign both a public and a private IP address to each VM, and apply identical inbound and outbound security rules to all five VMs. Because all five VMs require the same rules, a single NSG is sufficient: associate it with the subnet that contains the VMs, and every VM in that subnet is subject to the same rules.
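
NSG rules are evaluated in priority order (lower numbers first), and processing stops at the first matching rule. The Python sketch below models one NSG shared by all five VMs through the subnet; the rules and ports are hypothetical, and the catch-all rule only stands in for the built-in DenyAllInBound default rule.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    priority: int        # lower number = evaluated first
    port: Optional[int]  # destination port; None means "any" in this sketch
    action: str          # "Allow" or "Deny"


# One NSG associated with the subnet; the rules are hypothetical.
subnet_nsg: List[Rule] = [
    Rule(100, 443, "Allow"),
    Rule(200, 3389, "Deny"),
    Rule(65500, None, "Deny"),  # stands in for the default DenyAllInBound rule
]


def evaluate(rules: List[Rule], port: int) -> str:
    """Return the action of the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.port is None or rule.port == port:
            return rule.action  # processing stops at the first match
    return "Deny"


vms = [f"VM{i}" for i in range(1, 6)]
nsg_for_vm = {vm: subnet_nsg for vm in vms}  # all five VMs share the one NSG

for vm in vms:
    print(vm, evaluate(nsg_for_vm[vm], 443), evaluate(nsg_for_vm[vm], 3389))
```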

You have an Azure virtual machine named VM1 that runs Windows Server 2016. You need to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. Solution: You create an event subscription on VM1. You create an alert in Azure Monitor and specify VM1 as the source. Does the solution meet the goal?

Yes

No

The goal is to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. The proposed solution creates an event subscription on VM1, and then creates an alert in Azure Monitor that specifies VM1 as the source. Why doesn't this solution work? An event subscription is used to forward events or drive event-based automation; it does not collect the System event log into Azure Monitor. To alert on Windows event logs, Azure Monitor needs the logs to be collected into a Log Analytics workspace, which this approach does not include. Simply specifying VM1 as the source in Azure Monitor does not automatically track the System event log. Correct approach: use Azure Monitor together with Log Analytics. 1. Enable the Log Analytics agent on VM1 to collect System event logs. 2. Configure the Log Analytics workspace to collect event logs: go to Azure Monitor > Log Analytics workspace > Advanced settings > Data > Windows Event Logs, add System, and set the level to Error. 3. Create an Azure Monitor alert rule: go to Azure Monitor > Alerts and define a log-based alert that triggers when more than two error events occur within an hour, using a Kusto Query Language (KQL) query to filter events from the System event log.

You have an Azure virtual machine named VM1 that runs Windows Server 2016. You need to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. Solution: You create an Azure Log Analytics workspace and configure the data settings. You add the Microsoft Monitoring Agent VM extension to VM1. You create an alert in Azure Monitor and specify the Log Analytics workspace as the source. Does the solution meet the goal?

Yes

No

The goal is to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. The proposed solution creates an Azure Log Analytics workspace and configures the data settings, adds the Microsoft Monitoring Agent (MMA) VM extension to VM1, and creates an alert in Azure Monitor that specifies the Log Analytics workspace as the source. What this solution does correctly: Log Analytics is required to collect Windows event logs from VM1, and the MMA is needed to send VM1's logs to Log Analytics. Why doesn't this solution fully meet the goal? The solution is missing the log query for the alert. Adding the agent and the workspace does not trigger alerts by itself; you must create a log query-based alert in Azure Monitor, and the solution does not mention configuring a Kusto Query Language (KQL) query that checks for more than two error events in an hour.

You have an Azure virtual machine named VM1 that runs Windows Server 2016. You need to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. Solution: You create an Azure Log Analytics workspace and configure the data settings. You install the Microsoft Monitoring Agent on VM1. You create an alert in Azure Monitor and specify the Log Analytics workspace as the source. Does the solution meet the goal?

Yes

No

The goal is to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. The proposed solution creates an Azure Log Analytics workspace and configures the data settings, installs the Microsoft Monitoring Agent (MMA) on VM1, and creates an alert in Azure Monitor that specifies the Log Analytics workspace as the source. Why this solution meets the goal: a Log Analytics workspace is necessary to store and analyze event log data; the MMA sends VM1's event logs to Azure Log Analytics; and Azure Monitor can create alerts based on data stored in the Log Analytics workspace. Once the logs are collected, you can configure an alert rule in Azure Monitor with a Kusto Query Language (KQL) query that checks for more than two error events in the last hour.
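
The alert condition itself is a sliding-window count. The Python sketch below models it, and the QUERY string shows one way an equivalent log query could be written against the Event table that the agent populates. Both are illustrations under assumptions: the function name should_alert and the sample times are hypothetical, and in practice the alert rule's own evaluation frequency and threshold settings perform the windowing.

```python
from datetime import datetime, timedelta
from typing import List

WINDOW = timedelta(hours=1)
THRESHOLD = 2  # alert when MORE than two errors fall inside the window

# One possible log query for the alert rule (Event table, System log, errors).
QUERY = """Event
| where EventLog == "System" and EventLevelName == "Error"
| where TimeGenerated > ago(1h)
| count"""


def should_alert(error_times: List[datetime], now: datetime) -> bool:
    """True if more than THRESHOLD error events occurred in the hour before `now`."""
    recent = [t for t in error_times if now - WINDOW < t <= now]
    return len(recent) > THRESHOLD


now = datetime(2024, 1, 1, 12, 0)
errors = [now - timedelta(minutes=m) for m in (5, 20, 50)]
print(should_alert(errors, now))      # three errors within the hour
print(should_alert(errors[:2], now))  # only two errors
```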

You have an Azure virtual machine named VM1 that runs Windows Server 2016. You need to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. Solution: You create an Azure storage account and configure shared access signatures (SASs). You install the Microsoft Monitoring Agent on VM1. You create an alert in Azure Monitor and specify the storage account as the source. Does the solution meet the goal?

Yes

No

The goal is to create an alert in Azure when more than two error events are logged to the System event log on VM1 within an hour. The proposed solution creates an Azure storage account and configures shared access signatures (SASs), installs the Microsoft Monitoring Agent (MMA) on VM1, and creates an alert in Azure Monitor that specifies the storage account as the source. Why this solution does NOT meet the goal: Azure Storage accounts are not used for event log monitoring. Storage accounts store data such as blobs, files, and tables; they do not store VM1's Windows event logs for Azure Monitor to analyze. Shared access signatures are irrelevant here: a SAS grants temporary access to Azure Storage data and is not used for monitoring system logs. Azure Monitor cannot use a storage account as the source for event log alerts; to monitor Windows event logs, Azure Monitor must use a Log Analytics workspace.

You have an Azure virtual machine named VM1. VM1 was deployed by using a custom Azure Resource Manager template named ARM1.json. You receive a notification that VM1 will be affected by maintenance. You need to move VM1 to a different host immediately. Solution: From the Overview blade, you move the virtual machine to a different subscription. Does the solution meet the goal?

Yes

No

The goal is to move VM1 to a different host immediately to avoid the maintenance impact. The proposed solution moves VM1 to a different subscription from the Overview blade in the Azure portal. Why this solution does NOT meet the goal: moving a VM to a different subscription does not change its physical host. A subscription change affects billing and access control, not the VM's physical infrastructure; the VM remains in the same Azure region and datacenter, so it will still be affected by the maintenance. To move the VM to a different host, you need to redeploy it. Redeploying a VM assigns it to a new physical host in the same region.

You have an Azure virtual machine named VM1. VM1 was deployed by using a custom Azure Resource Manager template named ARM1.json. You receive a notification that VM1 will be affected by maintenance. You need to move VM1 to a different host immediately. Solution: From the Redeploy blade, you click Redeploy. Does the solution meet the goal?

Yes

No

The goal is to move VM1 to a different host immediately because of an upcoming maintenance event. The proposed solution navigates to the Redeploy blade in the Azure portal and clicks Redeploy. Why this solution meets the goal: redeploying a VM forces Azure to move it to a new physical host within the same region. Azure shuts down the VM, moves it to a new host, and powers it back on. The VM keeps its configuration and its OS and data disks, but data on the temporary disk is lost and dynamic IP addresses associated with its network interface can change, so expect a brief interruption.

You have an Azure virtual machine named VM1. VM1 was deployed by using a custom Azure Resource Manager template named ARM1.json. You receive a notification that VM1 will be affected by maintenance. You need to move VM1 to a different host immediately. Solution: From the Update management blade, you click Enable. Does the solution meet the goal?

Yes

No

The goal is to move VM1 to a different host immediately because of an upcoming maintenance event. The proposed solution suggests going to the Update Management blade and clicking "Enable". Why This Solution Does NOT Meet the Goal: Update Management is used for patching and compliance, not for moving VMs. It automates patch deployment and tracks update compliance, but it does NOT affect the VM's host placement, so clicking "Enable" does not move VM1. To move a VM to a different host, the correct action is to redeploy it, which forces Azure to shut down the VM and move it to a new physical host. Correct Solution: use the "Redeploy" option. Azure portal: go to the Azure portal → VM1, select Redeploy in the left-hand menu, and then click Redeploy. PowerShell: `Set-AzVM -ResourceGroupName "RG1" -Name "VM1" -Redeploy`. Azure CLI: `az vm redeploy --resource-group RG1 --name VM1`.
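If the Azure CLI redeploy command is run from a script, building it as an argument list avoids the quoting and dash mix-ups that often creep in when commands are copied from formatted text (the flags take two ASCII hyphens). The sketch below is a hypothetical wrapper: only `az vm redeploy --resource-group --name` comes from the text above, while the function names and the `dry_run` switch are illustrative. It prints the command by default and calls the CLI only when `dry_run=False`.

```python
import shlex
import subprocess


def redeploy_command(resource_group: str, vm_name: str) -> list:
    """Build the argument list for `az vm redeploy` (flags use two ASCII hyphens)."""
    return ["az", "vm", "redeploy", "--resource-group", resource_group, "--name", vm_name]


def redeploy_vm(resource_group: str, vm_name: str, dry_run: bool = True) -> list:
    """Print the command when dry_run is True; otherwise run it via the Azure CLI."""
    cmd = redeploy_command(resource_group, vm_name)
    if dry_run:
        print("Would run:", shlex.join(cmd))
    else:
        # Assumes the Azure CLI is installed and `az login` has already been run.
        subprocess.run(cmd, check=True)
    return cmd


redeploy_vm("RG1", "VM1")  # prints: Would run: az vm redeploy --resource-group RG1 --name VM1
```

Keeping the dry run as the default means the script shows exactly what it would execute before anything touches the subscription.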

Your company has several departments. Each department has several virtual machines (VMs). The company has an Azure subscription that contains a resource group named RG1. All VMs are located in RG1. You want to associate each VM with its respective department. What should you do?

Create Azure Management Groups for each department

Create a resource group for each department

Assign tags to the virtual machines

Modify the settings of the virtual machines

Tags in Azure allow you to categorize and organize resources like virtual machines (VMs) by assigning key-value pairs. Since all VMs are in the same resource group (RG1), using tags is the best way to associate each VM with its respective department. Why Not the Other Options? “Create Azure Management Groups for each department.” Azure Management Groups are used for governing multiple subscriptions, not for organizing VMs within a single subscription. Since all VMs are already in RG1, management groups are not needed. “Create a resource group for each department.” While creating separate resource groups could help in some cases, all VMs are already in RG1. Moving VMs to new resource groups requires reorganization and may impact access control and policies. Tags are a simpler and more flexible approach. “Modify the settings of the virtual machines.” VM settings control compute, network, and storage configurations, but they do not help categorize resources by department. Modifying VM settings is unnecessary for tagging.
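Because tags are plain key-value pairs, associating a VM with a department is just a matter of reading one tag key. The sketch below models that lookup over a hypothetical inventory: the tag key `Department`, the VM names, and the `group_by_tag` helper are illustrative assumptions, not Azure API objects. It shows how VMs that all share RG1 can still be grouped per department.

```python
from collections import defaultdict

# Hypothetical inventory: every VM lives in RG1 and carries its own tags.
vms = [
    {"name": "VM-Web01", "resource_group": "RG1", "tags": {"Department": "Sales"}},
    {"name": "VM-Db01", "resource_group": "RG1", "tags": {"Department": "Finance"}},
    {"name": "VM-Web02", "resource_group": "RG1", "tags": {"Department": "Sales"}},
    {"name": "VM-Test01", "resource_group": "RG1", "tags": {}},  # not tagged yet
]


def group_by_tag(resources, key):
    """Group resource names by the value of one tag key; untagged ones go under None."""
    groups = defaultdict(list)
    for r in resources:
        groups[r["tags"].get(key)].append(r["name"])
    return dict(groups)


print(group_by_tag(vms, "Department"))
# {'Sales': ['VM-Web01', 'VM-Web02'], 'Finance': ['VM-Db01'], None: ['VM-Test01']}
```

Untagged VMs surface under `None`, which makes it easy to spot resources that still need a department tag.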

You have an Azure subscription that contains an Azure Active Directory (Azure AD) tenant named contoso.com and an Azure Kubernetes Service (AKS) cluster named AKS1. An administrator reports that she is unable to grant access to AKS1 to the users in contoso.com. You need to ensure that access to AKS1 can be granted to the contoso.com users. What should you do first?

From contoso.com, modify the Organization relationships settings

From contoso.com, create an OAuth 2.0 authorization endpoint

Recreate AKS1

From AKS1, create a namespace

Azure Kubernetes Service (AKS) integrates with Azure Active Directory (Azure AD) to manage user authentication and access to the Kubernetes API server. If an administrator is unable to grant access to AKS1, it is likely because Azure AD integration is not correctly configured. One of the key requirements for Azure AD authentication in AKS is to have an OAuth 2.0 authorization endpoint configured in the Azure AD tenant (contoso.com). This endpoint is needed for token-based authentication, allowing users from contoso.com to authenticate and interact with the AKS cluster. When you create the OAuth 2.0 authorization endpoint, it enables AKS to use Azure AD for authentication, making it possible to assign RBAC (Role-Based Access Control) roles to users and grant them access to AKS1. Why not the other options? (a) Modify Organization relationships settings – This is used for configuring external collaboration (B2B) but is not relevant to granting internal users access to AKS. (c) Recreate AKS1 – Recreating the cluster is unnecessary; the issue is with authentication, not the cluster itself. (d) Create a namespace – Namespaces are used for organizing workloads in Kubernetes but do not impact authentication or user access control.

You must resolve the licensing issue before attempting to assign the license again. What should you do?

From the Groups blade, invite the user accounts to a new group

From the Profile blade, modify the usage location

From the Directory role blade, modify the directory role

In Azure Active Directory (Azure AD), licensing issues often occur due to insufficient permissions to assign or manage licenses. Only users with the necessary directory roles can assign licenses to other users. If an administrator or user is unable to assign a license, it may be because they lack the required administrative privileges. By modifying the directory role in the Directory role blade, you can assign a higher privilege role (such as License Administrator or Global Administrator) to the user, enabling them to resolve licensing issues and assign licenses again. Why not the other options? (a) Invite the user accounts to a new group – While groups can be used for license assignment, they do not resolve licensing issues caused by insufficient permissions. (b) Modify the usage location – Usage location is required for license assignment, but if the issue is related to permissions, changing the usage location won’t help.

Your company’s Azure subscription includes Azure virtual machines (VMs) that run Windows Server 2016. One of the VMs is backed up daily using Azure Backup Instant Restore. When the VM becomes infected with data encrypting ransomware, you are required to restore the VM. Which of the following actions should you take?

You should restore the VM after deleting the infected VM

You should restore the VM to any VM within the company’s subscription

You should restore the VM to a new Azure VM

You should restore the VM to an on-premises Windows device

In the event of a ransomware infection on an Azure VM that is backed up by using Azure Backup Instant Restore, the recommended approach is to restore the VM to a new Azure VM rather than overwriting the infected one. Azure Backup Instant Restore lets you recover the VM from a snapshot taken before it was compromised, and restoring that snapshot to a new VM ensures that: The infected VM is isolated to prevent the ransomware from spreading. A clean, uncompromised VM is restored from the latest safe backup. You can verify and test the restored VM before putting it back into production. Why not the other options? (a) Restore after deleting the infected VM – Deleting the infected VM before restoring is not recommended because you may need it for forensic analysis to determine how the ransomware got in. (b) Restore to any VM within the company's subscription – Restoring to an existing VM is risky because that VM may already be compromised or have a different configuration. A fresh VM ensures a clean environment. (d) Restore to an on-premises Windows device – Azure VM backup is designed for recovery in Azure, and restoring a VM backup to an on-premises device is not a standard recovery path.
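Choosing the "latest safe backup" comes down to picking the newest recovery point taken strictly before the infection. The sketch below assumes you already have an estimate of when the infection happened; the recovery point timestamps and the `latest_clean_point` helper are hypothetical. In practice ransomware can sit dormant before encrypting data, so the infection estimate itself may need to be pushed earlier.

```python
from datetime import datetime


def latest_clean_point(recovery_points, infection_time):
    """Return the newest recovery point taken strictly before infection_time, or None."""
    clean = [p for p in recovery_points if p < infection_time]
    return max(clean) if clean else None


# Hypothetical daily recovery points at 02:00 on March 1-4.
points = [datetime(2024, 3, d, 2, 0) for d in (1, 2, 3, 4)]
infected = datetime(2024, 3, 3, 14, 30)
print(latest_clean_point(points, infected))  # 2024-03-03 02:00:00
```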

You have an Azure subscription named Subscription1. Subscription1 contains two Azure virtual machines named VM1 and VM2. VM1 and VM2 run Windows Server 2016. VM1 is backed up daily by Azure Backup without using the Azure Backup agent. VM1 is affected by ransomware that encrypts data. You need to restore the latest backup of VM1. To which location can you restore the backup? NOTE: Each correct selection is worth one point. You can perform a file recovery of VM1 to:

VM1 only

VM1 or a new Azure Virtual Machine only

VM1 and VM2 only

A new Azure Virtual Machine only

Any Windows computer that has internet connectivity

Azure Backup provides two main types of backups for virtual machines: Backup with the Azure Backup agent – used for file and folder-level backup and recovery. Backup without the Azure Backup agent (Azure VM backup) – captures the entire VM for disaster recovery. Since VM1 is backed up without using the Azure Backup agent, it is protected by Azure VM backup, which is snapshot-based and supports File Recovery in addition to full VM restore. With Azure Backup's File Recovery feature, you can: Download and run a recovery script that mounts the disks from a recovery point as local drives. Copy files from those drives to the Windows computer that runs the script, provided it has an internet connection. This means you can recover files from VM1's backup to any Windows computer that is connected to the internet, including VM1, VM2, or even an on-premises machine. Why not the other options? VM1 only – You are not restricted to restoring files only to VM1. VM1 or a new Azure Virtual Machine only – You can restore files to any Windows computer, not just Azure VMs. VM1 and VM2 only – You are not limited to these VMs; file recovery can be done on any Windows computer. A new Azure Virtual Machine only – While you can restore to a new VM, you are not limited to that.

You have an Azure subscription named Subscription1. Subscription1 contains two Azure virtual machines named VM1 and VM2. VM1 and VM2 run Windows Server 2016. VM1 is backed up daily by Azure Backup without using the Azure Backup agent. VM1 is affected by ransomware that encrypts data. You need to restore the latest backup of VM1. To which location can you restore the backup? NOTE: Each correct selection is worth one point. You can restore VM1 to:

VM1 only

VM1 or a new Azure Virtual Machine only

VM1 and VM2 only

A new Azure Virtual Machine only

Any Windows computer that has internet connectivity

Since VM1 is backed up daily by Azure Backup without using the Azure Backup agent, the backup is a VM-level backup made by the native Azure VM backup service. Azure VM backup takes snapshots of the entire VM, which allows for: Restoring the VM in place (VM1) – This replaces the existing VM's disks with the backed-up version. Restoring the VM as a new Azure virtual machine – This creates a separate VM from the backup while keeping the infected VM intact for forensic analysis. Why not the other options? VM1 only – While you can restore to VM1, you also have the option to restore to a new VM. VM1 and VM2 only – You cannot restore a backup of VM1 directly to VM2 because VM backups are specific to the original VM. A new Azure Virtual Machine only – You can restore to a new VM, but you also have the option to restore in place to VM1. Any Windows computer that has internet connectivity – This applies only to file-level recovery; a full VM restore targets VM1 or a new Azure VM, not an arbitrary computer.
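The two VM1 questions differ only in the kind of recovery being asked about, so the answers can be summarized as a small lookup keyed by recovery type. The keys and the `answer_for` helper are hypothetical labels chosen for this sketch, not Azure API values; the answer strings are the option wording used in the two questions.

```python
# Answers from the two VM1 questions, keyed by the kind of recovery performed.
# Keys are illustrative labels, not values from any Azure API.
RESTORE_TARGETS = {
    "file_recovery": "Any Windows computer that has internet connectivity",
    "full_vm_restore": "VM1 or a new Azure Virtual Machine only",
}


def answer_for(recovery_type: str) -> str:
    """Return the answer option that matches the given recovery type."""
    try:
        return RESTORE_TARGETS[recovery_type]
    except KeyError:
        raise ValueError(f"unknown recovery type: {recovery_type!r}") from None


for kind in ("file_recovery", "full_vm_restore"):
    print(f"{kind}: {answer_for(kind)}")
```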

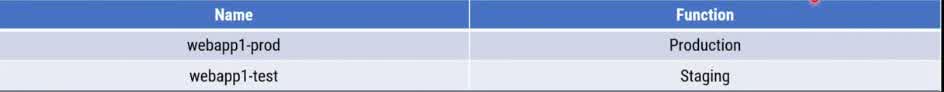

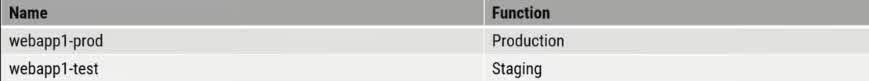

You have an Azure web app named App1. App1 has the deployment slots shown in the following table: In webapp1-test, you test several changes to App1. You back up App1. You swap webapp1-test for webapp1-prod and discover that App1 is experiencing performance issues. You need to revert to the previous version of App1 as quickly as possible. What should you do?

Redeploy App1

Swap the slots

Clone App1

Restore the backup of App1

Azure App Service provides deployment slots that allow you to test changes in a staging environment before pushing them to production. In this scenario, you: Tested changes in the staging slot (webapp1-test). Swapped the staging slot (webapp1-test) with the production slot (webapp1-prod), making the new version live. Discovered performance issues after the swap. Because the swap moved the previous production version into webapp1-test, you can swap the slots again to restore the previous version of App1 in production without redeploying. Why is swapping the slots the fastest solution? After a swap, the previous production version is still running in the staging slot. Swapping again immediately reverts the change and brings back the version that was previously in webapp1-prod. This minimizes downtime and avoids a full redeployment or a backup restore. Why not the other options? (a) Redeploy App1 – This would take longer because you need to find and redeploy the previous version manually. (c) Clone App1 – Cloning creates a new app instance but does not put the previous version back into production. (d) Restore the backup of App1 – While restoring a backup could work, it is much slower than simply swapping the slots.
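The "swap back" reasoning can be checked with a toy model in which a swap simply exchanges the contents of two slots, so doing it twice restores the starting state. This is a simplified sketch: the version labels and the `swap` helper are hypothetical, and it ignores details of real App Service swaps such as warm-up and settings marked as slot-specific, which stay with their slot.

```python
def swap(slots, a, b):
    """Exchange the app versions running in slots a and b (returns a new dict)."""
    swapped = dict(slots)
    swapped[a], swapped[b] = slots[b], slots[a]
    return swapped


slots = {"webapp1-prod": "v1 (stable)", "webapp1-test": "v2 (changes under test)"}

after_first = swap(slots, "webapp1-test", "webapp1-prod")
print(after_first["webapp1-prod"])   # v2 (changes under test)

after_second = swap(after_first, "webapp1-test", "webapp1-prod")
print(after_second["webapp1-prod"])  # v1 (stable)
```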

You have two subscriptions named Subscription1 and Subscription2. Each subscription is associated with a different Azure AD tenant. Subscription1 contains a virtual network named VNet1. VNet1 contains an Azure virtual machine named VM1 and has an IP address space of 10.0.0.0/16. Subscription2 contains a virtual network named VNet2. VNet2 contains an Azure virtual machine named VM2 and has an IP address space of 10.10.0.0/24. You need to connect VNet1 to VNet2. What should you do first?

Move VM1 to Subscription2

Move VNet1 to Subscription2

Modify the IP address space of VNet2

Provision virtual network gateways
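Before choosing among these options, it helps to check whether the two address spaces overlap, since overlapping ranges would have to be fixed before the networks could be connected. The sketch below uses Python's standard `ipaddress` module with the two ranges given in the question; it is only an arithmetic check, not a connectivity test.

```python
import ipaddress

vnet1 = ipaddress.ip_network("10.0.0.0/16")   # VNet1 address space
vnet2 = ipaddress.ip_network("10.10.0.0/24")  # VNet2 address space

print(vnet1[0], "-", vnet1[-1])  # 10.0.0.0 - 10.0.255.255
print(vnet2[0], "-", vnet2[-1])  # 10.10.0.0 - 10.10.0.255
print("Overlap:", vnet1.overlaps(vnet2))  # Overlap: False
```

Because the ranges do not overlap, modifying VNet2's address space is not required for the connection.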